What does Snowflake's arctic embedding model announcement tell us about Snowflake's abilities in the LLM world?

Last week Snowflake announced, relatively quietly, a new embedding model "family": arctic-embed. Rather than a technical review, this post discusses the strategic implications of the move and adds some color on what I believe Snowflake is trying to do.

I'll break it down in the following way:

What did they announce?

What does it mean?

What's missing from the announcement?

What does this suggest for the future?

So, what'd they announce?

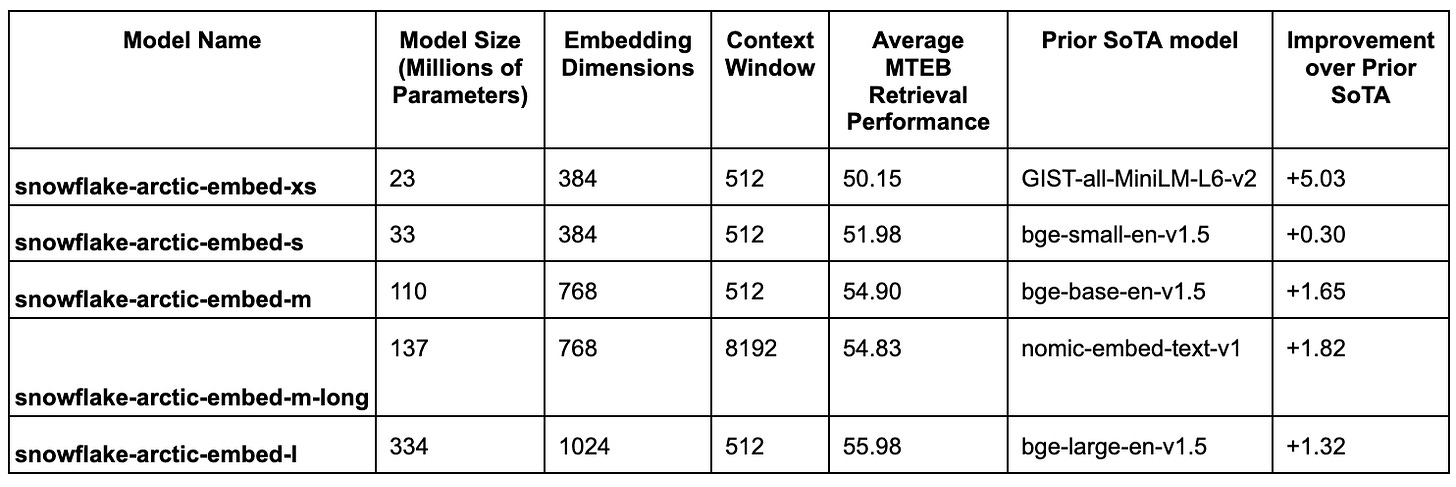

Snowflake announced five embedding models of various sizes. All of them are presented as state of the art on the retrieval category of the MTEB benchmark.

There's nuance here, because retrieval is basically a single column in the MTEB leaderboard. They included the following in their post:

As a side note, I'm a little surprised they didn't give us a bar graph. The table is a little terse and doesn't "pop".

A bar graph of:

Existing best model (grey or red)

Snowflake model (blue)

Would probably have looked a lot better.

What does this mean?

Snowflake wants to tell four stories.

Snowflake can build neural network models.

Snowflake data processing helps with building these models.

Customers should consider Snowflake when performing retrieval tasks.

Snowflake has the talent to seize the opportunity in AI.

Let's break each of these down in a bit more detail.

Snowflake can build neural network models.

This story is one that Snowflake, if they want to survive in the AI era, needs to start telling.

No big surprise here.

If I read the post correctly, here's what I think they did:

Took the best base models that they could find:

We would also be remiss to say that we did not build on the shoulders of giants, and in our training, we leverage initialized models such as (bert-base-uncased, nomic-embed-text-v1-unsupervised, e5-large-unsupervised, sentence-transformers/all-MiniLM-L6-v2).

Fine-tuned those with the same data (?) to improve the models, focusing particularly on ensuring that hard negative cases were used.

At a high level, we found that improved sampling strategies and competence-aware hard-negative mining could lead to massive improvements in quality.

Combined that data with web search data and fine-tuned some more.

When our findings were combined with web search data and a quick iteration loop to gradually improve our model until the performance was something we were excited to share with the broader community.

Iterated until they were SOTA and had something to write about.
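
To make the hard-negative step above concrete, here is a minimal Python sketch. It is not Snowflake's method: the post does not spell out what "competence-aware" mining involves, so this shows only plain similarity-ranked mining. The function name `mine_hard_negatives`, the toy 2-D vectors, and the rule that drops candidates scoring at or above the labeled positive (to avoid likely false negatives) are all my assumptions for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def mine_hard_negatives(query, docs, positive_idx, k=2):
    """Return indices of the k non-positive docs most similar to the query.

    Hypothetical helper: "hard" here simply means "scores high against
    the query while not being the labeled positive".
    """
    pos_score = cosine(query, docs[positive_idx])
    scored = [
        (cosine(query, doc), i)
        for i, doc in enumerate(docs)
        if i != positive_idx
    ]
    # Assumption: anything scoring at or above the labeled positive is
    # more likely an unlabeled positive than a useful negative, so drop it.
    scored = [(score, i) for score, i in scored if score < pos_score]
    scored.sort(reverse=True)  # most similar (hardest) first
    return [i for _, i in scored[:k]]


# Toy embeddings; a real pipeline would get these from the current model.
query = [1.0, 0.0]
docs = [
    [0.9, 0.1],  # 0: labeled positive
    [0.8, 0.6],  # 1: close but wrong, a hard negative
    [0.0, 1.0],  # 2: unrelated, an easy negative
    [0.6, 0.8],  # 3: somewhat close
    [1.0, 0.0],  # 4: identical to the query, filtered as a likely false negative
]
hard = mine_hard_negatives(query, docs, positive_idx=0, k=2)
print(hard)
```

On this toy data the function picks documents 1 and 3: close enough to the query to be confusing, but still below the labeled positive. Training on negatives like these, rather than on random unrelated documents, is the general idea behind the quote above.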

This whole process is interesting. It feels a little like p-hacking, but everyone does it, so draw your own conclusions. Basically: "hey, let's find something to publish." They've got money to throw at this problem; they just need to ship something.

They did mention some other "tricks" in their Medium post on the subject, but none stood out.

Snowflake data processing helps with building these models

This is pretty straightforward. They're telling customers that Snowflake can help prepare data for training. This isn't really anything new; I think they're just trying to plant the idea in the customer's mind: "hey, you already use us for BI, we can do RAG too!"

They didn't elaborate in the announcement post. A separate post on Medium goes further, but it was mostly about controlling negative cases and what goes into each batch.

Again, nothing revolutionary, but notable that they're trying to bring it all together.

Customers should consider Snowflake when performing retrieval tasks

If you take a look at the keywords (highlighted in the screenshot below), Snowflake is trying to get that SEO juice for retrieval.

You can see that Snowflake is getting busy trying to rank for embeddings, retrieval, and models. They cannot lose out on this market and if they don't have a strong embedding offering, they're in trouble.

Snowflake has the talent to seize the opportunity in AI.

I believe this point is aimed much less at customers than at Wall Street.

These impressive embedding models directly implement the technical expertise, proprietary search knowledge, and research and development that Snowflake acquired last May via Neeva. (source)

The stock has dropped quite a bit over the past couple of months, and they need to tell a story that Neeva was more than just a CEO acqui-hire.

The story they want is that Neeva came with secret sauce that Snowflake can now integrate into the Data Cloud. That was in the acquisition announcement post, and maybe they're just now getting to it.

What's missing from the announcement?

$SNOW is in a tough spot. The stock is down. Net revenue retention has been trending in the wrong direction, and they're not well positioned in the AI era. The announcement should have sought to change that, but there were four notable omissions:

Customers are now able to fine tune their own embeddings

That the training infrastructure ran on Snowflake

That the training was complicated

That it's generally available

Customers are now able to fine tune their own embeddings

There was no announcement, like in the case of DBRX, of customers being able to train their own embeddings.

This is notable. To me, it says they aren't ready to take this problem on. Databricks is running a training session on how to do just that at its Data + AI Summit.

I believe Snowflake would if they could, but they probably aren't ready.


That the training infrastructure ran on Snowflake

This is the biggest omission. It doesn't seem like any of this training took place on Snowflake infrastructure.

That the training was complicated

Hey, if you're trying to flex, you want to make things sound difficult, but that you made them easy. Snowflake kind of did the opposite:

Notably, none of our significant improvements came from a massive expansion of the computing budget. All of our experiments used 8 H100 GPUs!

That's not a lot. I feel like the flex you want is "hey, we had to do a ton to make this happen, but Snowflake made it easy." Instead it came across as "hey, we had some GPUs and this problem isn't too hard, so we just experimented until we achieved SOTA results."

That it's generally available

Arctic is part of the Cortex private preview. If they’re serious, they need to GA something in the AI domain quickly. They might be saving this for Snowflake Summit (now Data Cloud Summit), but only time will tell.

What does this suggest for the future?

So, Snowflake is moving in an AI direction. They're doing work in the domain. However, they've got a long way to go. Enabling fine-tuning for customers, especially enterprises, is going to be critical for platforms in the Data + AI era.

When Databricks announces that it fine-tuned a foundation model and that customers can do the same, while Snowflake announces that it fine-tuned an embedding model (something I did in graduate school), the latter isn't super impressive in comparison.

Now, Snowflake isn't going to take this all sitting down. They're going to work toward it; the bigger question is whether global warming melts the Snowflakes before they get there.